For the purposes of this article, prompts are what you ask the AI to do, and context is the information it has access to.

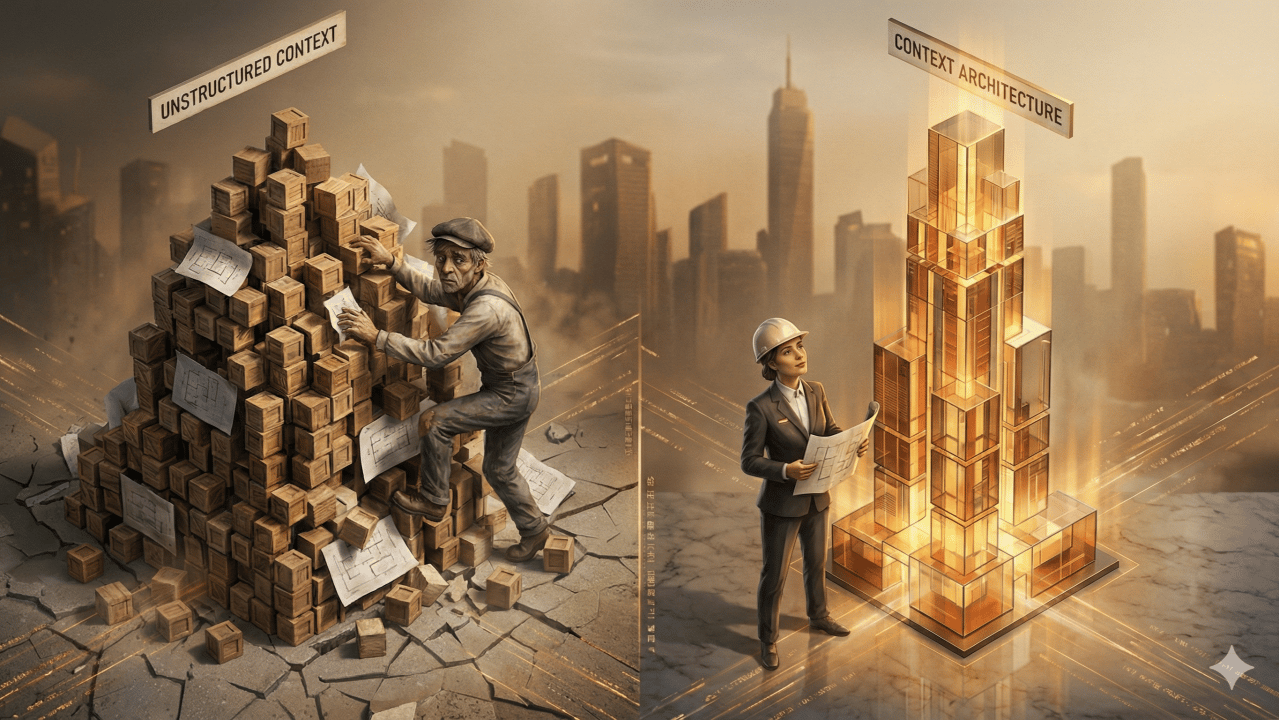

One day ChatGPT nails the tone perfectly. The next day it ignores everything you told it. Most AI advice starts and ends with:

Write better prompts

Load more context (paste it in, upload files, add to GPTs, Projects, Gems)

So people refine prompts, upload brand guidelines into a GPT, and add some docs to a Project. Quality goes up, then hits a ceiling.

Aaron Levie wrote:

“The ultimate force multiplier will be the context that the agents get.”

He is right. What gets missed is that it is not about “more context”. It is about giving the AI the right context at the right time.

That requires context infrastructure that most people either:

Do not have access to

Have not set up

Do not even realise exists

Nobody really teaches:

The best prompts in the world do not fix terrible context

Why “more context” often makes things worse (context rot)

What context rot looks like in practice

Why context needs infrastructure if you want intelligence to compound

How to balance too much context against too little

How to move from chat threads to an actual context system

This piece is for non-technical operators who feel they have hit that ceiling. By the end, you should:

Know roughly which stage you are at

See the gaps in that stage

Understand the next structural step, not just “write better prompts”

Context infrastructure in one sentence

Context infrastructure = the filing system, rules, and tools that decide:

What information your AI sees

When it sees it

How that information is stored for next time

Without that, you can only optimise prompts. With it, you can actually scale quality and consistency.

Why “great context management” is the real unlock

Think of hiring a very smart intern who knows nothing about the company. Dumping the entire file server on their desk does not work. Neither does giving them nothing.

Good context management is about balance:

Exactly what they need for the very first task

Where to find more as they progress

Building up their knowledge systematically over time

Most non-technical AI users are never taught that as conversations run longer, the model’s working memory (its context window) fills with noise: previous searches, tool outputs, false starts, and fragments that no longer matter. It is like giving someone directions while they juggle 50 other conversations. Even with perfect instructions, critical details get lost. That is context rot.
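One part of this effect is simple arithmetic. The toy model below is a Python sketch under a deliberately crude assumption: every token in the window counts equally (real models do not work that way). It tracks what share of the window your original instructions occupy as searches, tool outputs, and drafts pile up. The token counts are made up for illustration.

```python
def instruction_share(instruction_tokens: int, later_turns: list[int]) -> list[float]:
    """Share of the context window held by the original instructions after each turn.

    Toy model (assumption): every token counts equally. Real models weigh tokens
    unevenly, so this only illustrates dilution, not how attention actually works.
    """
    shares = []
    total = instruction_tokens
    for turn_tokens in later_turns:
        total += turn_tokens  # each search, tool output or false start adds to the window
        shares.append(instruction_tokens / total)
    return shares


# Hypothetical session: a 500-token brief, then three noisy turns
for turn, share in enumerate(instruction_share(500, [1_500, 3_000, 5_000]), start=1):
    print(f"after turn {turn}: instructions are {share:.0%} of the window")
```

In this made-up session the brief drops from 25% of the window to 5% after three turns. The instructions are still there; they are just a shrinking part of what the model is reading.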

Then, when the conversation starts again tomorrow, most web AI tools reset everything. A new chat means a clean slate, or an auto-summary that drops details. Even GPTs, Projects, and Gems do not “remember”. They just run fresh searches each time and pull different fragments from their knowledge base.

This is the plateau most people hit:

Better prompts on average context: ~20% → ~60% quality, then stuck

Better context with decent prompts: ~20% → ~80–85% quality

Everyone obsesses over prompts because prompting is visible, teachable, and what tutorials focus on. That is useful at the start. But once your prompting is “good enough”, you are optimising the wrong thing.

No amount of prompt engineering fixes bad context. With the wrong information, perfect prompts produce perfectly confident nonsense.

The Five Stages of AI Mastery

I use a five-stage ladder to explain where people are getting stuck, and what needs to change.

Stage 1: One-Off Prompting

Stage 2: Prompt Frameworks

Stage 3: Context Loading

Stage 4: Automated Context Management

Stage 5: Compounding Context Infrastructure

Most non-technical users live in Stages 1 and 2. Some reach Stage 3. A small slice hits Stage 4. Almost nobody outside technical circles is operating at Stage 5.

Think of these stages like a library and a librarian:

Stage 1: walking in and asking for “something good” with no topic

Stage 5: having a librarian who knows your catalogue, remembers yesterday’s work, and hands you complete books instead of random pages

Everything in between is how well you manage that relationship.

Quick self-check: which stage are you at?

Very loosely:

Stage 1: You mostly type into the main chat box. No Projects, GPTs, Gems, or Claude Code.

Stage 2: You have favourite prompts or templates, maybe stored in Notion or a doc, but no real use of files.

Stage 3: You regularly paste long docs or upload PDFs into chats to “give context” each time.

Stage 4: You have at least one GPT / Project / Gem / Copilot with brand guidelines and core docs uploaded. You feel “set up”.

Stage 5: You are using a file-based workspace like Claude Code with projects, folders, and notes that live beyond one chat.

If you are honest, you probably know where you sit.

Now let’s walk the ladder.

Stage 1: One-Off Prompting

You type whatever comes to mind and hope for a good answer.

Vague questions, generic answers

No structure, no context, just conversation

Quality is a lottery (often 20–30%)

Analogy: walking into a library and saying “give me something good” with no topic.

“Write me a blog post” produces templated garbage anyone could write. “Help me with quarterly planning” gets generic advice from public frameworks, nothing specific to your company.

The only thing you really learn here is: “Be more specific”.

Stage 2: Prompt Frameworks

You start using repeatable structures and templates.

Role + Task + Format

TRACE, TAG, RTF

Few-shot examples

Chain of thought reasoning
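As a rough illustration of what a framework like Role + Task + Format with few-shot examples amounts to, here is a minimal Python sketch. The class and field names are hypothetical, not any tool's API. Notice what it does not contain: any of your own data.

```python
from dataclasses import dataclass, field


@dataclass
class PromptFrame:
    """Hypothetical Role + Task + Format template with optional few-shot examples."""

    role: str
    task: str
    output_format: str
    examples: list[tuple[str, str]] = field(default_factory=list)  # (input, ideal output) pairs

    def render(self) -> str:
        parts = [
            f"Role: {self.role}",
            f"Task: {self.task}",
            f"Format: {self.output_format}",
        ]
        # Few-shot: show the model worked examples of the output you want
        for i, (given, wanted) in enumerate(self.examples, start=1):
            parts.append(f"Example {i} input: {given}\nExample {i} output: {wanted}")
        return "\n\n".join(parts)


prompt = PromptFrame(
    role="Strategist at a B2B software startup",
    task="Draft a Q2 planning outline",
    output_format="Five headed sections, three bullets each",
    examples=[("Q1 outline request", "1. Goals\n2. Risks\n3. Owners")],
)
print(prompt.render())
```

The structure makes requests consistent and repeatable, but every field is still your wording. Nothing in it gives the model last quarter's numbers or your planning framework.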

Quality jumps, and it feels like magic.

Analogy: instead of “something good”, you now ask the librarian for “a business strategy book, 2020–2023, focused on tech startups, with case studies”. That is massively better. The librarian knows which section to search.

But they still do not know your industry, your company, or your situation. So they photocopy chapters from general business books. Good, but generic. Sometimes it fits. Sometimes it misses.

The trap: perfect questions cannot fix missing information. Ask an AI to sum sales figures with perfect instructions (“be precise, do not estimate, only return the exact number”) and it can still miscount. It is reading numbers and predicting what the answer should look like, not calculating.

Prompting tells the AI where to look. It still does not have your specific data, frameworks, or reference material.

Most AI tutorials stop here.

Stage 3: Context Loading

Here people realise: “The model needs my world, not just better wording”.

They start deliberately feeding in examples, files, and background so the model understands their business, brand, and processes.

They:

Paste entire brand guidelines

Upload PDFs of frameworks

Add process documents and notes

Quality jumps. Outputs feel sharper and more on brand. Many people stop here and assume AI is “sorted”.

The catch is context dumping: throwing everything at the model at once. PDFs, notes, screenshots, frameworks, past conversations. No filtering. No prioritisation. No structure.

Stage 3 breaks in four ways:

Manual friction: Every session starts from zero. PDFs re-uploaded. Notes re-pasted. Examples re-fed. It feels like onboarding a new intern every single time.

Over-supply of context: Too much information in the window. No hierarchy. No clear signal. The model scans everything and guesses what matters. Results swing from sharp to nonsense.

No visibility into working memory: The context window fills silently. No clear view of token usage, what is active, or what is stale. Context rot kicks in. Earlier instructions get buried under noise.

No persistence: Close the tab. Come back tomorrow. Everything is gone. Whatever the model learned evaporates. Back to manual upload.

Analogy: the librarian finally has the right books, but they sit in a teetering pile on the desk.

Every visit starts with you retelling your story and dragging out the same pile (manual friction).

New books get stacked on top so the important ones are hard to find (over-supply and noise).

No one knows which pages are being photocopied (no visibility).

At closing time the whole pile is cleared away (no persistence).

Stage 3 proves that context matters, but also that context without structure hits diminishing returns fast.

Stage 4: Automated Context Management

Stage 4 feels like everything is finally sorted.

Files live in ChatGPT Projects, Custom GPTs, Claude Projects, or Gemini Gems. You upload once, then let the system “handle the context”.

Stage 3 pain drops:

Less copy-pasting

Fewer giant prompts

Lower chance of blowing the context window in one go

Come back next week and the files are still there

Under the hood, most of this runs on Retrieval-Augmented Generation (RAG): the tool stores documents, then searches and pulls back matching fragments each time a question is asked. That is where the ceiling comes from.
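To make the mechanism concrete, here is a minimal Python sketch of retrieval. Assumptions: documents are cut into fixed-size word windows, and chunks are ranked by how many words they share with the question. Production tools typically rank with embeddings (semantic similarity) and use their own chunk sizes, so treat this as an illustration of the shape of RAG, not how any particular product implements it. The file names are hypothetical.

```python
import re


def chunk(source: str, text: str, size: int = 200) -> list[tuple[str, str]]:
    """Cut a document into fixed-size word windows, each tagged with its source."""
    words = text.split()
    return [(source, " ".join(words[i:i + size])) for i in range(0, len(words), size)]


def words_in(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list[tuple[str, str]], k: int = 3) -> list[tuple[str, str]]:
    """Return up to k chunks that share the most words with the question."""
    q = words_in(question)
    # Stable sort: chunks with equal scores keep their original order
    ranked = sorted(chunks, key=lambda c: len(q & words_in(c[1])), reverse=True)
    return [c for c in ranked[:k] if q & words_in(c[1])]


# Tiny 5-word chunks so the demo stays readable
library = chunk("planning-framework.md",
                "Step one set planning goals. Step two rank priorities. Step three assign owners.",
                size=5)
library += chunk("q1-plan.md", "Q1 priorities were hiring and planning the launch.", size=5)

for source, fragment in retrieve("What are our planning priorities?", library):
    print(f"{source}: {fragment}")
```

The answer gets built from three fragments, cut mid-sentence and spread across two files. The framework's third step (assigning owners) never reaches the model, because it shares no words with the question.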

The main gaps:

Storage, not memory (still starting from scratch): The system remembers files, not the work. It does not reliably remember which frameworks were used, what decisions were made, or how yesterday’s thread ended. A new session runs fresh searches as if nothing happened.

Fragments, not full documents (no framework): RAG pulls chunks that match your question, by keywords or by semantic similarity. Ask about strategic planning and it drags in a few paragraphs from different pages instead of the full planning framework. The AI sees pages, not the structure of the book.

Discontinuous, siloed workspace (no compounding): Each GPT, Project, or Gem holds its own slice of context. One island for strategy, another for operations, another for content. There is no single continuous workspace where notes, files, and conversations build on each other over time.

Opaque context window (no control): You cannot see what is actually in the context window, how full it is, or what has been silently summarised away. You cannot set rules for how information is compacted, which notes should be saved, and what should be dropped. The platform controls context management, not you.

Limited tools and rearranging (you still do the heavy lifting): The AI can browse or search, but it cannot reliably restructure your library for you, compact your working notes into a system, or wire in the right tools to manage its own context long term. You still end up renaming files, re-organising folders, and patching gaps by hand.

Analogy:

The librarian has a clearly labelled shelf with your name on it and keeps all “your” books together, so it feels like they know you. In reality they only know where your books are stored, not what you actually worked on last time (no true persistent memory).

When you ask a question, they walk into the library, pick a section, find a book, and quickly photocopy a few pages that match your words. Fast and helpful for quick look-ups, but they never give you the whole book or explain how those pages sit inside a bigger model, so your AI is always working from fragments, not foundations (no complete frameworks, classic RAG).

They mostly operate inside a single wing of the library at a time. Ask a marketing question and they stay in marketing, barely tying it to useful pieces from finance, ops, or strategy. You get local answers that feel smart but do not join into one shared picture (no compounding context).

You have no visibility into how they work. You cannot see which shelves they checked, how many books they skimmed, or how close they are to being overloaded. Pages can fall off the pile and get ignored without you noticing (no context management control).

If the library layout is not working, the librarian will not redesign it for you. You still rename sections, tidy shelves, decide what gets archived, and create your own signposts. The system fetches information faster, but it does not maintain or improve the library on your behalf (you still do the heavy lifting).

It looks sorted. Underneath, it is still retrieval.

Stage 4 is where many “power users” stop. Stage 5 is where things finally start compounding.

Stage 5: Compounding Context Infrastructure

Stage 5 is where progressive disclosure (revealing context step by step, only as it is needed) becomes the engine, and tools like Claude Code give it a home.

Instead of RAG grabbing random chunks:

The AI navigates a real file system

Loads full documents and frameworks on demand

Saves useful notes back into that system for next time
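Here is a minimal Python sketch of that navigation, assuming a hypothetical convention: every folder contains an INDEX.md whose lines read `keyword: child`. Instead of searching everything, the walker reads one index at a time, steps into the matching entry, and finally opens one complete file. In a real setup the model would make each choice by reading the index; simple keyword matching stands in for that judgement here.

```python
import tempfile
from pathlib import Path


def pick_from_index(folder: Path, question: str) -> Path:
    """Read this folder's INDEX.md and return the first child whose keyword is in the question."""
    for line in (folder / "INDEX.md").read_text(encoding="utf-8").splitlines():
        keyword, _, child = line.partition(":")
        if keyword.strip() and keyword.strip().lower() in question.lower():
            return folder / child.strip()
    raise LookupError(f"Nothing in {folder / 'INDEX.md'} matches the question")


def progressive_disclosure(root: Path, question: str) -> tuple[list[str], str]:
    """Walk from the root, one index at a time, until a single file is reached."""
    trail, here = [], root
    while here.is_dir():
        here = pick_from_index(here, question)
        trail.append(here.name)
    return trail, here.read_text(encoding="utf-8")  # the whole file, not a fragment


def build_demo_library(root: Path) -> None:
    """Create a tiny hypothetical library with two levels of indexes."""
    planning = root / "planning"
    planning.mkdir()
    (root / "INDEX.md").write_text("planning: planning\nbrand: brand-guidelines.md\n", encoding="utf-8")
    (root / "brand-guidelines.md").write_text("# Brand guidelines\nPlain, warm, direct.\n", encoding="utf-8")
    (planning / "INDEX.md").write_text("framework: framework.md\nnotes: planning-notes.md\n", encoding="utf-8")
    (planning / "framework.md").write_text(
        "# Planning framework\n1. Set goals\n2. Rank priorities\n3. Assign owners\n", encoding="utf-8")
    (planning / "planning-notes.md").write_text("# Planning notes\n", encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    build_demo_library(Path(tmp))
    trail, document = progressive_disclosure(Path(tmp), "Apply our planning framework to Q2")
    print(" -> ".join(trail))
    print(document)
```

The trail (planning -> framework.md) doubles as a record of how the context was gathered, and the model receives all three steps of the framework rather than whichever paragraphs happened to match.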

Three ideas come together:

Progressive disclosure (how the librarian moves through the library)

File-based context (where knowledge lives and how it is structured)

Compounding memory and tools (how work persists and improves)

Key traits:

Progressive disclosure as the default (structured navigation): The AI does not “search everything” in one go. It starts at the directory, picks the right folder, checks an index or guide, then opens the right file and the right section. Context is revealed step by step, instead of dumping or guessing.

Complete frameworks, not fragments (full book context): Once the right file is identified, the AI can work from the whole thing and understand how pages fit into the broader model or framework. Answers are grounded in structure, not just isolated paragraphs.

Persistent, compounding workspace (keeps and reuses work): In Claude Code-style environments, notes, decisions, and distilled outputs are saved back into the repo. Next time the AI sees both the raw source and the prior notes. Work layers on top of work.

Visible, controllable context (you manage the load): You can see which files are in play, how much context is being used, and when it is time to compact. You can script how notes get summarised and written back. The context window becomes something you design, not a black box.

Tooling wired into the library (less guessing, more doing): Because the AI operates in a code-capable environment, it can call tools, run scripts, and use APIs when needed. Read a framework, then run a script to apply it. Read a ledger, then calculate. Read a spec, then generate structured outputs.

Operator-owned filing system: You define folders, indexes, contents pages, and section guides. The library structure is explicit and under your control, not hidden in a vendor’s index.
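A minimal Python sketch of the write-back loop. Assumptions: notes are stored as one JSON object per line in a hypothetical planning-notes.jsonl file (JSON lines just keeps parsing simple), and the compaction rule (keep only the latest decision per topic) is one illustrative rule you might script, not a built-in feature of any tool. The decisions and dates are made up.

```python
import json
import tempfile
from datetime import date
from pathlib import Path
from typing import Optional


def save_note(notes_file: Path, topic: str, decision: str, day: Optional[date] = None) -> None:
    """Append one decision to the notes file so the next session can read it."""
    entry = {"date": (day or date.today()).isoformat(), "topic": topic, "decision": decision}
    with notes_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")


def load_notes(notes_file: Path) -> list[dict]:
    """Read every saved note; a missing file simply means no prior work yet."""
    if not notes_file.exists():
        return []
    lines = notes_file.read_text(encoding="utf-8").splitlines()
    return [json.loads(line) for line in lines if line.strip()]


def compact_notes(notes_file: Path) -> int:
    """Illustrative compaction rule: keep only the latest decision per topic."""
    latest = {}
    for entry in load_notes(notes_file):
        latest[entry["topic"]] = entry  # later entries overwrite earlier ones
    notes_file.write_text("".join(json.dumps(e) + "\n" for e in latest.values()), encoding="utf-8")
    return len(latest)


with tempfile.TemporaryDirectory() as tmp:
    notes = Path(tmp) / "planning-notes.jsonl"
    save_note(notes, "headcount", "Hire two engineers", date(2024, 1, 10))
    save_note(notes, "budget", "Cap ad spend", date(2024, 1, 12))
    save_note(notes, "headcount", "Freeze hiring until Q3", date(2024, 4, 2))
    print(compact_notes(notes), "topics kept")
    for note in load_notes(notes):
        print(note["date"], note["topic"], "->", note["decision"])
```

Because the rule is plain code, you decide what survives compaction instead of a platform summarising silently on your behalf.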

Analogy:

The librarian starts at the map of the library, walks to the right section, checks the shelf guide, picks the right book, then opens the right chapter (progressive disclosure, not blind searching).

Instead of handing you photocopied pages, they give you the whole book and point out the chapter and model that matter, so every answer sits on a complete framework, not scraps (complete frameworks, not fragments).

As you work, they take neat notes, label them, and file them back into your section of the library. Next time you arrive, those notes are already there alongside the original books, so you always start one step ahead (persistent, compounding workspace).

You can see how full the table is, what books are open, and when it is getting cluttered. You can ask the librarian to summarise three books into one set of notes and shelve the rest (visible, controllable context).

When numbers or structured tasks show up, they grab a calculator, spreadsheet, or database, instead of eyeballing it from a paragraph (tooling wired into the library).
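Once the AI can run code, the calculator step is the easy part, and it also fixes the sales-figure trap from Stage 2. A minimal Python sketch, assuming the ledger is a CSV with an `amount` column (the column name and figures are hypothetical): the total is computed, not predicted, so it comes out the same every time. `Decimal` avoids floating-point rounding on money.

```python
import csv
import io
from decimal import Decimal


def total_sales(ledger_csv: str, column: str = "amount") -> Decimal:
    """Sum one column of a CSV ledger exactly, instead of estimating from the text."""
    rows = csv.DictReader(io.StringIO(ledger_csv))
    return sum((Decimal(row[column]) for row in rows), Decimal("0"))


ledger = """region,amount
North,1200.50
South,980.25
West,1419.25
"""
print(total_sales(ledger))  # 3600.00
```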

Can GPTs, Gems, or Projects do this?

At a surface level, they can fake parts of it. You can upload a “menu” file with instructions and tell them how to use your documents. Underneath they are still running on RAG: they search, pull fragments, and rebuild answers on the fly.

The same Stage 4 limits stay in place:

Fragments

Reset conversations

No real persistent memory

No explicit filing system you control

These tools live in Stage 4, not Stage 5.

What about Claude Skills?

Skills can help the model follow more advanced workflows, but they are still constrained in web UIs.

In Claude Code, Skills sit on top of:

A real file system

Persistent projects

Visible context

So Skills can:

Read full documents and frameworks, not just chunks

Create and update notes and summaries in the repo

Reuse those notes next time without you re-uploading anything

That is where progressive disclosure, Skills, persistent files, and tools start working together. Over time, the library gets better. Every framework added, every note saved, every refactor of the folder structure improves future answers.

That is context infrastructure.

Quick example: same task, different stages

Take one task: “Build a quarterly planning doc”.

Stage 2: “Act as a strategist. Create a Q2 planning template.” You get a generic outline.

Stage 3: You paste last quarter’s plan, notes, and goals. Better, but you redo that every time.

Stage 4: You upload past plans into a Project / GPT, then ask it to draft Q2. It retrieves some chunks. Quality is decent but hits a ceiling, and you cannot see what it pulled from.

Stage 5: You have a /planning folder in Claude Code with previous plans, a planning framework, and a planning-notes.md file. The AI reads the framework, past plans, and your notes, then updates planning-notes.md with this quarter’s decisions, and you reuse that file next quarter. It can also pull relevant context from elsewhere in your filing system, such as notes auto-saved from conversations earlier today about this same kind of task.
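What the AI reads for a Stage 5 task like this is something you can make explicit. A minimal Python sketch: sources are listed in priority order (framework first, then notes, then past plans) and added until a token budget is reached, with a report of what was left out. The four-characters-per-token estimate is a rough rule of thumb for English text, not an exact count, and the file names, sizes, and budget are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Rough rule of thumb (assumption): about 4 characters per token for English text
    return max(1, len(text) // 4)


def assemble_context(sources: list[tuple[str, str]], budget: int) -> tuple[list[str], list[str], int]:
    """Add sources in priority order; skip any that would push past the budget."""
    included, skipped, used = [], [], 0
    for name, text in sources:
        cost = estimate_tokens(text)
        if used + cost <= budget:
            included.append(name)
            used += cost
        else:
            skipped.append(name)  # reported, not silently dropped
    return included, skipped, used


planning_sources = [  # highest priority first; placeholder text stands in for real files
    ("planning-framework.md", "x" * 400),
    ("planning-notes.md", "x" * 320),
    ("q1-plan.md", "x" * 400),
    ("q4-plan.md", "x" * 160),
]
included, skipped, used = assemble_context(planning_sources, budget=250)
print(f"loaded {included}, skipped {skipped}, {used}/250 tokens")
```

Because later items are still tried after one is skipped, a small, lower-priority file can fit where a larger one did not; stopping at the first overflow would be an equally reasonable rule. Either way, you can see exactly what made it into the window.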

Same question. Completely different context infrastructure. Completely different ceiling of quality.

A note on teams, data, and reality

This ladder works at two levels:

Your personal stack

Your team or company stack

None of this means “dump every confidential thing into random tools”. You still need to respect access control, PII, and data policy. The point is not “give AI everything”. The point is “build a deliberate filing system for what AI should see”.

Most organisations today are stuck at a shared Stage 2 or Stage 3. They have great prompts and a dumping habit. Almost no one has proper Stage 5 context infrastructure.

Why this actually matters

When Aaron Levie says context is the force multiplier, this is what he is pointing at. The companies that win with AI will not be the ones with slightly better prompts. They will be the ones with better context infrastructure.

Projects, GPTs, and Gems automate uploading and retrieval

Progressive disclosure and file-based workspaces make context management explicit

AI does not need more clever instructions. It needs a better library.

Next time you work with AI, stop and ask:

What stage is my context infrastructure actually at?

What is the smallest move I can make to climb one rung?

Written by Mike ✌

Passionate about all things AI, emerging tech and start-ups, Mike is the Founder of The AI Corner.
