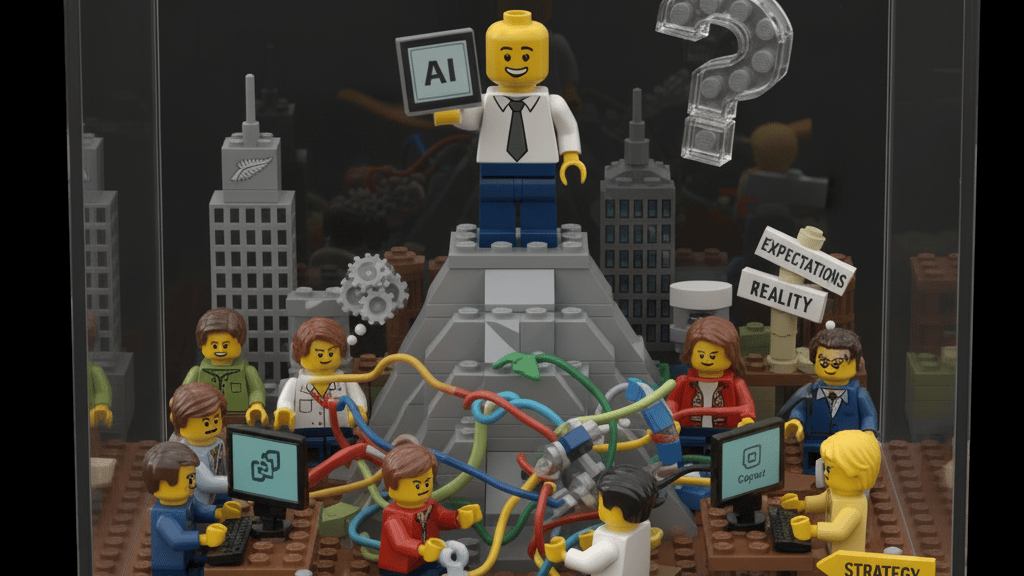

Here's a pattern I'm seeing across Kiwi businesses: the CEO announces "we're implementing AI", IT rolls out Copilot or Gemini because it's already in the Microsoft or Google stack, everyone gets access, the box is ticked, and six months later, nothing meaningful has changed.

Sound familiar?

The problem isn't the tools. These horizontal platforms are genuinely powerful: superb plumbing that connects apps, exposes APIs, and handles everyday productivity. They're a great way to get started with AI before more advanced experimentation can happen.

The problem is what's missing: the strategy that sits above the tools.

Without strategic intent driving deployment, here's what happens: you end up with a busy IT team or AI Working Group building workarounds, half-automated workflows that create as much work as they save, and frustrated end-users who can't make the tool work for their actual jobs. Not to mention the disconnect between executive expectations and the reality of workers who aren't feeling material differences in their work-life balance.

That's the convenience trap Big Tech unintentionally creates in New Zealand. In 95% of my conversations with mid-market businesses, the ones that don't get the attention of the big consultancies, it's clear this trap is stalling AI adoption. Easy access masks the absence of a strategic foundation.

Why "Easy and Cheap" Isn't a Strategy

When the CEO and CFO sit down to score AI options, Microsoft or Google inevitably tops the shortlist. Already licensed. Gentle learning curve. Marginal cost almost invisible on the P&L.

Easy. Cheap. Familiar.

But "easy and cheap" isn't a destination. It's an on-ramp.

These tools arrive without requiring any active decision about how you'll use them or what you're trying to achieve. They just appear, like email in 1998 or Teams in 2020. Available, integrated, invisible.

And because no one actively decided how to use these tools or, most importantly, what outcomes to target, there's no:

Commercial objective these tools are meant to achieve

Capability development to help people use them effectively

Process integration into actual workflows

Governance or measurement of what success looks like

Plan for where freed-up human capacity gets redeployed

Without these foundations, you don't have AI adoption. You have AI access. Those are fundamentally different things. And we'll see a divide open up between businesses that build something enduring through structured AI adoption and those that slide into a slow grind of obsolescence.

"But Everyone's Telling Me I Need to Do AI"

The challenge is that AI feels impossible to get your head around because everyone describes it at a different level of abstraction, then expects you to synthesise it all into a coherent strategy.

Your futurist employees talk about "AI Agents". Your IT person talks about "infrastructure". Your consultant talks about "workflows". Your CEO talks about "competitive advantage". Your HR lead talks about "change management".

They're all right. But that's exactly the problem.

AI isn't just a technology deployment of Copilot or Gemini. It's five forces playing out simultaneously:

A literacy challenge: Understanding what AI is, how it reasons, and where it fails. Knowing the difference between automation (rules and triggers), AI (reasoning and pattern recognition), and agents (goal-driven systems that plan and act).

A workflow redesign: Recognising that much of professional work exists not because humans are good at it, but because we were the only option. AI gives us the chance to rebuild how work gets done.

A capability shift: Understanding that AI doesn't just automate tasks; it changes which skills matter. Analytical work becomes orchestration work. Execution becomes judgment. Following processes becomes handling exceptions. Most of your current workforce isn't equipped for these roles yet, and most managers don't know how to develop these capabilities.

A governance imperative: Confronting the fact that leaders who built the old operating model may not be equipped to redesign it. This forces hard discussions about power, decision rights, and accountability.

A strategic repositioning: Seeing that businesses built around lots of people will struggle against those built around scarce human talent used only where it creates unique value.

Notice that technology and data aren't on the list. They're important, but secondary. These five transformations don't happen in isolation. Your AI strategy should address all five at once, connecting literacy → capability → workflow redesign → governance → business model transformation into a unified path forward.

Most businesses deploy AI tools whilst ignoring these transformations. That's why they fail.

The technology is easy. You can deploy Copilot in a week. You can outsource data plumbing. But building organisational change around literacy, capability, workflows and governance takes months of deliberate work, underpinned by a robust AI strategy.

What AI Strategy Actually Means

Strategy is the how and where. A real AI strategy answers these questions before you touch any tools:

What commercial outcome are we targeting? Not "improve productivity"; that's too vague. The outcome must connect directly to your P&L through a clear strategic lever: growth (increase pipeline by targeting 40% more opportunities), margin (reduce operational costs by $120K annually), retention (cut churn by 15% through faster support), speed-to-market (launch campaigns in 5 days instead of 3 weeks), or quality (eliminate 80% of errors to improve delivery rates).

Which workflows will we transform first? Not everything at once. Pick the workflows where repetitive cognitive work is slowing you down, where accuracy matters, and where the commercial payoff is clear.

What does success look like in 12 months? Specific metrics. Time saved. Revenue influenced. Margin protected. Customer satisfaction improved. If you can't quantify it, you don't have a strategy.

Where will we redeploy the capacity we create? This is the question most executives dodge. If AI makes your team 30% more efficient, what happens to that 30%? New revenue lines? Better customer service? Different roles? Fewer roles? You need an answer before you start, not six months in. This starts at the top and is fundamentally a shift in how the business operates, affecting everything from your business strategy to your talent strategy.

How will we measure progress along the way? Early stages need different metrics than later stages. Behaviour and fluency first. Process efficiency next. Strategic outcomes throughout, with the biggest ones measured over a longer time horizon.

Without clear answers to these questions, you're just deploying tools and hoping something good happens.

The Question Every CEO Must Answer

What matters more than which tools you choose is this: where will you redeploy your human capital?

Every organisation now faces this choice. These aren't rules, but they help leaders think clearly:

In growth markets, AI creates capacity to expand, but what you expand into matters more than the capacity itself.

When your marketing team can execute 70% faster or your analysts produce insights in minutes instead of hours, you face a strategic choice: do you use that capacity to do more of what you already do (serve more customers, launch more campaigns, pursue more deals), or do you use it to do something fundamentally new?

The highest-value play is usually the latter. That "someday" list. The new distribution channel that reaches a different customer segment, the content series that builds authority in an adjacent market, the product launch that's been sitting in the backlog for 18 months. These aren't just nice-to-haves. They're pathways to new revenue streams, market expansion, and competitive differentiation that compound over time.

AI doesn't just make you faster. It makes previously uneconomical business models viable. That D2C channel that never made sense with a 12-person team suddenly works with a 7-person team and AI handling the repetitive work. That's not productivity. That's strategic optionality.

In mature or declining markets, the conversation shifts from optionality to necessity.

If your market is shrinking, AI-driven efficiency isn't just about doing more with less. It's about survival and repositioning. You free up capacity, but you can't just let it sit idle or assume the same business model will absorb it.

There are at least three strategic paths to consider:

Pivot to growth pockets: Redeploy people into the remaining growth segments within your market or adjacent markets where you can still compete effectively.

Build new revenue engines: Use freed capacity to create new lines of business. Services that complement your core, different customer segments, or new delivery models that weren't economical before.

Protect margin through restructuring: If neither growth nor pivot options exist, reduce your cost base to stay competitive. This is the hardest conversation, but sometimes you must restructure your team before the market forces it on less favourable terms.

The strategic question isn't "Should we use AI?" It's "What business model do we need to survive and win, and how does AI enable that transformation?"

Leaders who get this right don't just deploy tools or redeploy people. They redesign their business model around where value will be created in the next 3-5 years, then use AI to make that model economically viable with the team they can afford.

Understanding Where You Actually Are (And Which Transformation Deserves Your Focus)

McKinsey's 2025 AI survey shows that almost every company believes it is "doing AI", but two-thirds are still stuck in early experiments and only a small minority have reached real scale. Only 6% qualify as true high performers, which suggests most companies are overestimating their actual maturity.

Here is how to diagnose your real stage based on the problems that surface at each level (not exhaustive, but common issue areas).

If shadow AI is everywhere and you can't see what people are using

Your problem is literacy and governance.

Executives feel the pain of paying for Copilot licences while staff run ChatGPT in the browser, paste company data into consumer tools and bypass the security perimeter entirely. You cannot manage risk when you do not know where data is going or who is liable if something goes wrong. McKinsey found that 51% of organisations have already experienced at least one negative AI outcome, most commonly inaccuracy, privacy issues or explainability failures. Only a small number have strong mitigation in place.

Focus on shared understanding, clear policies and a common strategy. Measure success by policy adoption, leadership alignment and a reduction in shadow AI as people shift to approved platforms.

If people complain that AI "doesn't work" and adoption is stuck at 10-20%

Your problem is capability development.

Executives invested in AI tools based on vendor promises, but teams struggle to get useful outputs. Productivity has not moved. The board wants ROI figures you cannot provide. People try the tool once, get generic results and return to old processes.

The McKinsey report confirms this pattern. 88% of companies say they use AI, but most of that use is superficial. Only a small fraction see deep adoption across functions. High performers treat capability building as a core investment, not a nice-to-have.

Focus on structured learning programmes, prompt patterns and hands-on practice. Measure adoption rates, depth of use, time saved and quality improvements.

If automations are scattered across teams with no central visibility

Your problem is workflow redesign.

You have isolated wins but no repeatability. Each team builds its own "magic workflow" and nothing scales. When someone leaves, the knowledge disappears. There is no systematic improvement.

High performers are almost three times more likely to have redesigned workflows for AI. Only 23% of organisations are scaling agents anywhere, which reflects this gap. Most workflows are not ready for end-to-end autonomy yet.

Focus on mapping current processes, standardising patterns and redesigning workflows with AI embedded from the start. Measure cycle time improvements, error reduction, monthly hours saved and standard pattern reuse.

If you are funding pilots with no commercial outcomes

Your problem is strategic positioning.

You are spending money on AI activity without a clear line to revenue, margin, cost reduction or customer outcomes. The board hears experiments rather than business value. Competitors move faster because they focus on commercial outcomes first.

Only 39% of companies report any EBIT impact from AI, and for most of them that impact is less than 5%. High performers do the opposite. They aim for commercial value early, invest heavily and pursue transformational changes rather than incremental efficiency.

Focus on linking AI directly to P&L levers. Define the commercial outcomes you expect. Map workflows to financial impact and determine how human capital will be redeployed. Measure progress through margin, throughput, revenue, retention and quality gains.

Don't try to run before you can walk

You cannot skip levels.

You fix literacy and governance first as your foundation.

You build individual capability before redesigning workflows.

You redesign workflows before strategic transformation.

This Isn't Microsoft or Google's Fault

Let's be clear: this is not a problem created by Microsoft or Google. They've built genuinely impressive technology and made it widely accessible.

The problem is executives treating tech accessibility as if it solves the transformation challenge. It doesn't. Solving that challenge requires treating AI as what it actually is: an organisational change, not a deployment of Microsoft Copilot or Gemini Enterprise.

You don't have an AI strategy just because you've rolled out Copilot. You have a checkbox. The strategy is what you do next.

Written by Mike ✌

Passionate about all things AI, emerging tech and start-ups, Mike is the Founder of The AI Corner.
