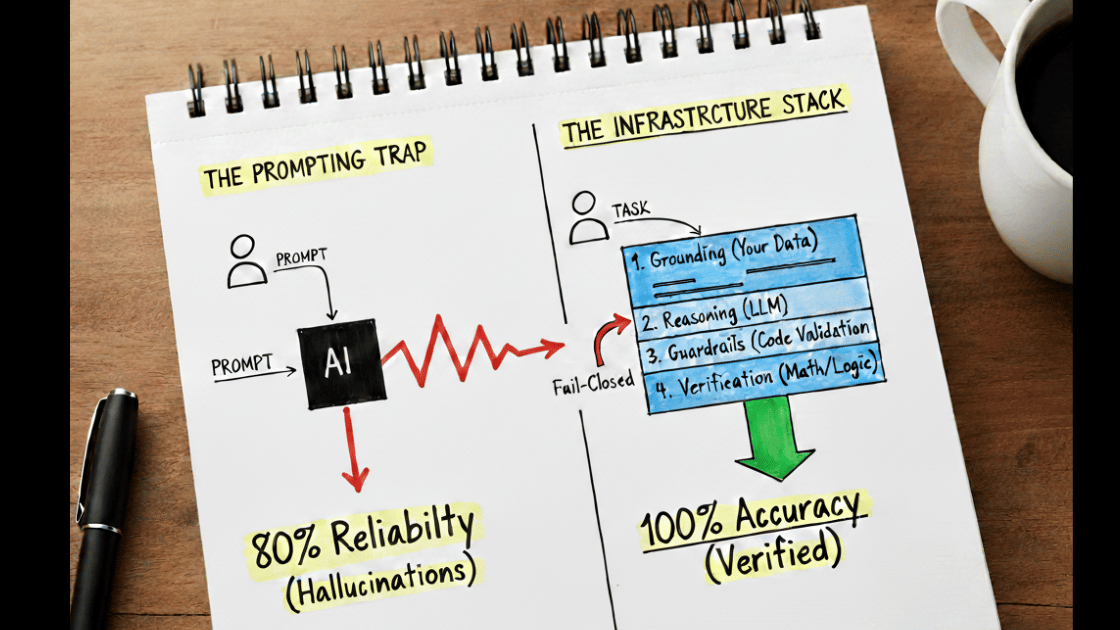

Same Claude model. One team gets hallucinations. Another gets 100% accurate consulting proposals. The difference: one types into a chat window, the other built infrastructure.

That contrast is playing out across every industry right now, and the gap is widening fast.

The $67.4 billion problem businesses struggle with

The numbers are staggering. AI hallucinations cost enterprises $67.4 billion globally in 2024 (Korra, 2024), and a Fortune/MIT study found 95% of generative AI pilots fail to deliver measurable ROI. The primary driver: reliability, not capability.

Even the best available model (Gemini 2.0 Flash) still hallucinates in 0.7% of responses. That means roughly 1 in every 140 outputs contains fabricated information (AllAboutAI, 2026). For mission-critical work, those odds are unacceptable.

The industry's default response has been throwing humans at the problem: 76% of enterprises now require manual review before deploying AI outputs (Drainpipe, 2025). The result is a productivity paradox costing $14,200 per employee annually in hallucination mitigation alone. Technology designed to accelerate work is actually slowing it down.

Better prompts won't fix this. Better architecture is the path.

How I learned this the hard way

Months ago, the AI outputs coming out of my own workflows were inconsistent. Sometimes brilliant, sometimes completely wrong. Same model, same prompts, wildly different quality.

The instinct is to blame the technology. But once you work with people who understand how LLMs work and what they can do, you quickly realise that conclusion comes from a lack of education.

It took me longer than I'd like to admit to realise the problem was never the model. It was the absence of anything around the model, and my lack of awareness of what else could be built.

Four layers that changed everything

The fix wasn't better prompts. It was infrastructure. Four layers, built over months of iteration (and a few genuinely terrible first versions):

Context layer. Loading domain-specific files (client data, competitive landscape, historical conversations) so the model generates from verified evidence, not parametric memory. Research shows RAG alone reduces hallucinations by up to 71% (Drainpipe, 2025). Retrieval architecture for the real world, not a clever prompt.
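As a rough illustration of the context layer, the sketch below loads every file in a folder and places it ahead of the task in the prompt. The function names `load_context` and `build_prompt`, the `.md` suffix, and the wording of the instruction are all assumptions made for this example, not the setup described in this article.

```python
from pathlib import Path


def load_context(context_dir: str, suffix: str = ".md") -> dict[str, str]:
    """Read every context file in a directory, keyed by file name."""
    files = sorted(Path(context_dir).glob(f"*{suffix}"))
    return {f.name: f.read_text(encoding="utf-8") for f in files}


def build_prompt(task: str, context: dict[str, str]) -> str:
    """Put the verified sources ahead of the task and tell the model to stay within them."""
    sections = [f"## Source: {name}\n{text.strip()}" for name, text in context.items()]
    return (
        "Answer using only the sources below. "
        "If the sources do not cover something, say so instead of guessing.\n\n"
        + "\n\n".join(sections)
        + f"\n\n## Task\n{task}"
    )
```

A full retrieval setup would select only the relevant passages instead of loading everything, but the principle is the same: the model writes from files you control.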

Validation layer. Structured output schemas through Pydantic, Instructor, and Guardrails AI. JSON schema enforcement reduces errors by 70% (Cognitive Today, 2025), and the model literally cannot produce responses that fail business rules. Hallucinated fields get caught and regenerated before a human ever sees them.
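To make the validate-and-regenerate loop concrete, here is a minimal sketch using only the Python standard library. It is a stand-in for what Pydantic, Instructor, or Guardrails AI would do, not those libraries' APIs. The schema `ProposalFacts`, its fields, the business rules, and the `generate` callable (any function that takes a prompt and returns the model's text) are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposalFacts:
    # Hypothetical schema for illustration only.
    client_name: str
    goals: list[str]
    budget_usd: float


def parse_facts(raw: str) -> ProposalFacts:
    """Parse model output; raise ValueError if it breaks the schema or a business rule."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {"client_name", "goals", "budget_usd"}
    if set(data) != expected:
        # Extra (possibly hallucinated) or missing fields are rejected outright.
        raise ValueError(f"unexpected or missing fields: {sorted(set(data) ^ expected)}")
    name, goals, budget = data["client_name"], data["goals"], data["budget_usd"]
    if not isinstance(name, str) or not name.strip():
        raise ValueError("client_name must be a non-empty string")
    if not isinstance(goals, list) or not goals or not all(isinstance(g, str) for g in goals):
        raise ValueError("goals must be a non-empty list of strings")
    if isinstance(budget, bool) or not isinstance(budget, (int, float)) or budget <= 0:
        raise ValueError("budget_usd must be a positive number")
    return ProposalFacts(name, goals, float(budget))


def generate_validated(
    generate: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> ProposalFacts:
    """Call the model, validate the result, and retry with the error fed back on failure."""
    feedback = ""
    for _ in range(max_attempts):
        raw = generate(prompt + feedback)
        try:
            return parse_facts(raw)
        except ValueError as exc:
            feedback = f"\n\nYour previous answer was rejected: {exc}. Return corrected JSON only."
    raise RuntimeError(f"no valid output after {max_attempts} attempts")
```

Nothing reaches a reviewer unless `parse_facts` accepts it, so a failed attempt costs a retry and never ships as a wrong proposal.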

Quality layer. Deterministic linting across brand voice, accuracy, and structural integrity. Code-based rules, not probabilistic checks. Pass or fail, automatically. (This layer alone took three rewrites to get right.)
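A deterministic linter can be very small. The sketch below shows one rule per category (brand voice, accuracy, structure). The banned phrases, the sentence-length limit, and the idea of checking percentages against a verified set are assumptions chosen for illustration, not the rules used in the workflows described here.

```python
import re
from typing import NamedTuple


class Violation(NamedTuple):
    rule: str
    detail: str


# Hypothetical rules; a real set would come from your own brand and accuracy guidelines.
BANNED_PHRASES = ("game-changing", "revolutionary", "world-class")
MAX_SENTENCE_WORDS = 35


def lint(text: str, allowed_figures: set[str]) -> list[Violation]:
    """Return every rule violation; an empty list means the draft passes."""
    violations = []
    lowered = text.lower()
    # Brand voice: flag banned marketing phrases.
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            violations.append(Violation("brand_voice", f"banned phrase: {phrase}"))
    # Accuracy: every percentage must appear in the verified source set.
    for figure in re.findall(r"\d+(?:\.\d+)?%", text):
        if figure not in allowed_figures:
            violations.append(Violation("accuracy", f"unverified figure: {figure}"))
    # Structure: flag over-long sentences.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        if len(sentence.split()) > MAX_SENTENCE_WORDS:
            violations.append(Violation("structure", f"sentence over {MAX_SENTENCE_WORDS} words"))
    return violations
```

The same input always produces the same verdict, which is what separates this from asking a second model whether the draft "looks fine".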

Routing layer. Language models for synthesis and reasoning, retrieval systems for factual accuracy, code execution for calculations. The right tool for each specific subtask, orchestrated by the model itself.
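One way to picture the routing layer is a small dispatcher: the model names a tool for each subtask, and code sends the input to that tool. The sketch below assumes the model emits steps shaped like `{"tool": ..., "input": ...}`. That step format, the tool names, and the `retrieve` and `synthesise` callables are hypothetical. Arithmetic is evaluated in code with a deliberately narrow parser.

```python
import ast
import operator
from typing import Callable

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculate(expression: str) -> str:
    """Evaluate plain arithmetic in code instead of asking a language model to do it."""
    def ev(node: ast.AST) -> float:
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")

    return str(ev(ast.parse(expression, mode="eval").body))


def make_router(
    retrieve: Callable[[str], str], synthesise: Callable[[str], str]
) -> Callable[[dict], str]:
    """Build a dispatcher that sends each step to the tool the model named."""
    tools = {"calculate": calculate, "retrieve": retrieve, "synthesise": synthesise}

    def route(step: dict) -> str:
        tool = tools.get(step.get("tool"))
        if tool is None:
            raise ValueError(f"unknown tool: {step.get('tool')!r}")
        return tool(step["input"])

    return route
```

With this in place, a step such as `{"tool": "calculate", "input": "14200 * 120"}` is computed exactly, and anything that names an unknown tool fails loudly.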

Combined approaches like these achieve 90-96% hallucination reduction in production (NAACL, 2024). No single technique delivers that. It requires these layers to compound.

AI Accuracy in Action: Outputs down from days to under an hour

Klarna proved this at scale: 2.3 million customer conversations in the first month, equivalent to 700 full-time agents, running through 40+ guardrails including hallucination prevention, PII detection, and automatic handoff when queries fall outside scope (Klarna, 2024).

ServiceNow achieved a 54% deflection rate on common forms and $5.5 million in annualised savings through RAG-based infrastructure (ServiceNow Research, 2024).

One example from our own customer base, which we have also rebuilt for ourselves, is proposal development. The old process took days: reviewing discovery call transcripts, manual analysis, draft writing, multiple review rounds, and formatting. With the infrastructure stack in place, that same process runs in under an hour. The system ingests the call transcript, extracts goals and pain points against a structured schema, loads the client's industry context and competitive landscape, and generates a proposal accurate to the specific conversation. Not a generic template with names swapped in.

95% reduction in development time, with significantly less human involvement in the drafting process. Not because the AI is smarter. Because the infrastructure made it reliable.

Another example, our account intelligence workflow: 15 files, 14 rules, 8 passes

To have an intelligent conversation with a prospect, account research needs to move beyond a quick skim of a website and LinkedIn. We've built a system that achieves near-100% accuracy on the information it gathers, aligned to what we need to know about the business.

Today, 15 context files load into my Claude Code session before a single word gets generated: client industry, challenges, competitive landscape, and previous conversations, among others.

A Python linter enforces 14 auto-fail rule categories across every output.

Eight quality passes before content is considered production-ready.

Same model that "hallucinates" for other teams produces accurate, client-specific work through this infrastructure for our team. The variable was never the model.

Infrastructure compounds. Prompts don't.

The same pattern applies everywhere these organisations are building: content systems that compound intelligence week over week, data pipelines surfacing insights humans can't identify at scale, brand voice enforcement running automatically across every output. Not experimental. Production-grade.

Every context file added, every validation rule written, every quality gate implemented makes next week's outputs measurably better than this week's. The infrastructure compounds. Prompts don't.

Anthropic's own circuit tracing research (2025) confirmed what builders already knew: hallucinations aren't inherent to language models. They're architectural misfires, circuit-level failures that can be engineered away with the right systems around the model.

For mission-critical activities, inaccurate AI outputs are unacceptable. That's a fact. But it doesn't have to be the reality. The architecture to solve it exists right now.

Get in touch if you have questions about the techniques required to achieve higher levels of accuracy.

This isn't about prompting better. It's about building better.

Written by Mike ✌

Passionate about all things AI, emerging tech and start-ups, Mike is the Founder of The AI Corner.
